iPhone will soon be able to replicate your voice after just 15 minutes of training

FILE - Apple Inc. iPhone 14 smartphones at the company's store in the Gangnam District of Seoul, South Korea, on March 31, 2023. Photographer: SeongJoon Cho/Bloomberg via Getty Images

CUPERTINO, Calif. - Apple this week announced a slew of new accessibility features coming to iPhone and iPad, including one that will replicate your voice after just 15 minutes of audio training.

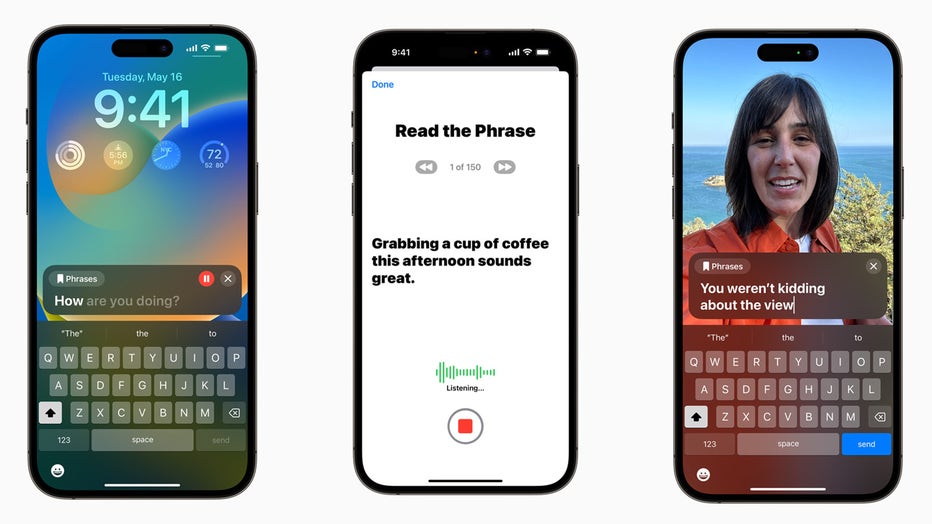

One of the new tools, called "Personal Voice," has users read a randomized set of text prompts aloud to record 15 minutes of audio on iPhone or iPad.

It then integrates with another feature, called "Live Speech," which lets users type what they want to say and have it spoken aloud in their own voice during phone and FaceTime calls, as well as in-person conversations.

For now, Personal Voice is only available in English.

Live Speech on iPhone, iPad, and Mac gives users the ability to type what they want to say and have it be spoken out loud during phone and FaceTime calls, as well as in-person conversations. Personal Voice allows users to create a voice that sounds l

In a post previewing the new updates, Apple said the new features were "designed to support millions of people globally who are unable to speak or who have lost their speech over time." This includes users with a recent diagnosis of ALS (amyotrophic lateral sclerosis) or other conditions that can progressively impact speaking ability.

"At the end of the day, the most important thing is being able to communicate with friends and family," Philip Green, board member and ALS advocate at the Team Gleason nonprofit, said in a statement.

Green has experienced "significant changes" to his voice since receiving an ALS diagnosis in 2018, Apple said.

"If you can tell them you love them, in a voice that sounds like you, it makes all the difference in the world — and being able to create your synthetic voice on your iPhone in just 15 minutes is extraordinary," Green added.

The new voice tools also come amid growing concerns about bad actors increasingly using "deepfakes," or hyper-realistic photos, videos or audio files manipulated by artificial intelligence (AI), to scam or fool others.

Sam Altman, CEO of OpenAI, the company that makes ChatGPT, testified before Congress on Tuesday and said that government intervention "will be critical to mitigate the risks of increasingly powerful" AI systems.

AI should be regulated, ChatGPT chief says

Leaders in the artificial intelligence community are calling for a global agency to regulate the rise of AI. "It can go quite wrong," the head of the company behind ChatGPT told members of Congress at a hearing on May 16.


Apple said the new voice feature will use machine learning, which is a type of AI, on the device itself "to keep users’ information private and secure."

In addition to the voice tool, Apple also announced Assistive Access, which offers a customized experience for popular apps: Phone and FaceTime are combined into a single Calls app, alongside Messages, Camera, Photos, and Music. The feature offers high-contrast buttons and large text labels, as well as other tools to help "lighten cognitive load."

In one example, Apple said Messages will include an emoji-only keyboard for those who prefer to communicate visually. Additionally, users can choose between a grid-based layout for their Home Screen and apps, or a row-based layout for those who prefer text.

Apple said the new features will roll out later this year.

"Accessibility is part of everything we do at Apple," Sarah Herrlinger, Apple’s senior director of global accessibility policy and initiatives, said in a statement. "These groundbreaking features were designed with feedback from members of disability communities every step of the way, to support a diverse set of users and help people connect in new ways."


This story was reported from Cincinnati.